MARS7 Voice AI Now Available for VPC on Google Cloud Vertex AI

At CAMB.AI, we build voice AI that sounds so natural, so emotionally real, that audiences forget they’re listening to a machine. Our flagship model, MARS7, is a multilingual text-to-speech (TTS) system built for the world’s most demanding environments — from live sports and global media to customer engagement and mission-critical communications. With support for 150+ languages, voice cloning, and fine-grained emotional control, MARS7 is setting a new benchmark for what “AI voice” truly means.

And this isn’t just theory. It’s proven at the bleeding edge of live entertainment.

Just weeks ago, MARS7 powered a historic NASCAR Xfinity Series broadcast, delivering real-time multilingual commentary to millions of racing fans. English came from human commentators; Spanish came entirely from AI: live, in sync, and full of passion. Read more about this historic first in Sports Business Journal.

🎥 Watch the NASCAR broadcast clip below to see MARS7 in action.

What NASCAR experienced is part of a growing wave. CAMB.AI’s technology has already powered global moments for Major League Soccer, the Australian Open, Comcast (NBC), IMAX, Eurovision, and Dentsu Broadcasting — events where millions tune in and where voice AI must be indistinguishable from the real thing.

Today, we’re proud to announce that the same model, MARS7, is available to run directly in your environment through Google Cloud Vertex AI Model Garden, starting with 10 languages and locales.

Why Enterprises Trust MARS7

MARS7 isn’t just another TTS model — it’s a broadcast-quality voice AI trusted in environments where failure isn’t an option. Despite its robustness and expressive performance, it’s remarkably lightweight: a ~80-million-parameter Megabyte encoder-decoder architecture designed to capture human-like prosody and range.

The result:

- Voices that connect — emotionally rich, natural delivery across any use case.

- Global reach — multilingual-first, with accents and locales for authenticity.

- Flexibility — voice cloning and fine-grained emotional tuning for different audiences.

“Enterprise customers demanded broadcast‑quality voice AI they could deploy in their own environment. MARS7 on Vertex AI delivers exactly that—the same technology powering the world’s biggest sports and media brands, now running entirely within your VPC.”

— Akshat Prakash, Cofounder and CTO of CAMB.AI

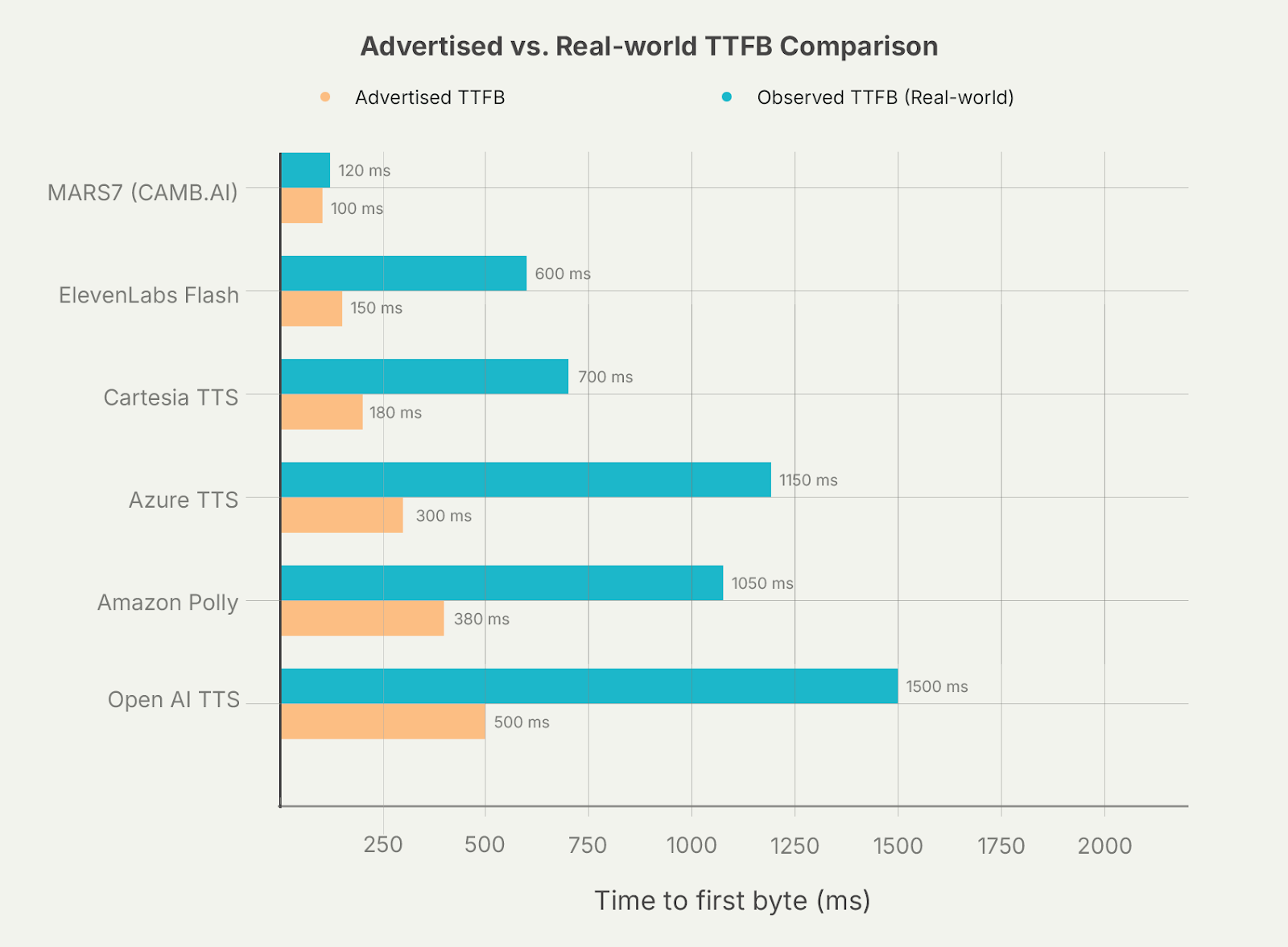

Speed That Changes Everything: Understanding TTFB, Latency, and Where MARS7 Wins

In voice AI, speed is as critical as quality. If a voice assistant pauses too long before speaking, the conversation feels broken. If live commentary lags behind video, the broadcast falls out of sync. In both cases, the experience fails.

The key measure is Time to First Byte (TTFB) — the delay between sending text to a TTS API and receiving the very first sound back.

It breaks down into four parts:

- Request travel → the text has to reach the server.

- Queue wait time → most providers run all customers on shared GPU pools, so under load, your request may sit behind hundreds of others.

- Model processing → the TTS model begins generating speech (this is the theoretical TTFB most vendors advertise).

- Audio return → the first byte of audio travels back to your client.

Most providers publish numbers only for model processing. In practice, request travel, queue wait, and audio return add hundreds of milliseconds, or even whole seconds. That’s why an “advertised” 150 ms TTFB often feels like 800 ms–2 seconds in real-world usage.
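Because the advertised figure usually covers only model processing, the number worth tracking is the end-to-end TTFB your own client observes. The Python sketch below shows one way to measure it: start the clock before the request is opened and stop it at the first non-empty audio chunk, so request travel, queue wait, model processing, and audio return are all included. `measure_ttfb` and the `open_stream` callable are illustrative names, not part of any CAMB.AI or Google Cloud API, and the fake stream merely simulates a delay.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_ttfb(open_stream: Callable[[], Iterable[bytes]]) -> Tuple[float, bytes]:
    """Return (seconds until the first non-empty audio chunk, that chunk).

    The clock starts *before* open_stream() is called, so the measurement covers
    request travel, queue wait, model processing, and audio return: the
    end-to-end TTFB the client actually experiences.
    """
    start = time.perf_counter()
    for chunk in open_stream():
        if chunk:  # ignore empty keep-alive chunks
            return time.perf_counter() - start, chunk
    raise RuntimeError("stream ended before any audio arrived")


if __name__ == "__main__":
    def fake_tts_stream() -> Iterable[bytes]:
        # Hypothetical stand-in for a real TTS stream: the sleep simulates
        # network, queue, and model time before the first audio bytes arrive.
        time.sleep(0.05)
        yield b""
        yield b"first-audio-bytes"

    ttfb, first = measure_ttfb(fake_tts_stream)
    print(f"TTFB: {ttfb * 1000:.0f} ms, first chunk: {len(first)} bytes")
```

With a real streaming HTTP endpoint, `open_stream` could wrap something like `requests.post(url, json=payload, stream=True).iter_content(chunk_size=None)`; the `url` and `payload` depend on your deployment and are not specified here.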

MARS7 on Vertex AI removes most of those hidden delays.

- Deploy close to users. With Google Cloud’s 40+ global regions, network travel is minimal.

- Dedicated GPUs. Unlike shared SaaS models, your VPC deployment runs on GPUs reserved for your workloads, with no queueing behind other customers.

- GPU choice. Select from NVIDIA A100, H100, L4, L40S and more — tuning for ultra-low latency or high throughput, depending on your use case.

- Practical = theoretical. The result: our TTFB isn’t just a spec sheet number — it’s what you actually experience.

For live sports commentary, customer support, or emergency systems, this difference is the line between speech that feels instant and speech that feels laggy.
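One way to check the “practical = theoretical” claim, and to pick a region close to your users, is to collect TTFB samples from each candidate deployment and compare tail latency rather than averages. The sketch below assumes you already have per-region samples in milliseconds, gathered however you measure TTFB. The region names are real Google Cloud regions, but the sample values are made up, and `summarize_ttfb` and `pick_region` are hypothetical helpers.

```python
import statistics
from typing import Dict, List, Tuple


def summarize_ttfb(samples_ms: List[float]) -> Tuple[float, float]:
    """Return (p50, p95) of TTFB samples in milliseconds."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # 99 cut points; "inclusive" keeps estimates within the observed range.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return cuts[49], cuts[94]


def pick_region(samples_by_region: Dict[str, List[float]]) -> str:
    """Return the region with the lowest p95 TTFB (tail latency, not the mean)."""
    return min(samples_by_region,
               key=lambda region: summarize_ttfb(samples_by_region[region])[1])


if __name__ == "__main__":
    # Made-up samples for illustration only.
    samples = {
        "us-central1": [180.0, 190.0, 200.0, 210.0, 650.0],
        "europe-west4": [230.0, 235.0, 240.0, 245.0, 250.0],
    }
    for region, ms in samples.items():
        p50, p95 = summarize_ttfb(ms)
        print(f"{region}: p50={p50:.0f} ms, p95={p95:.0f} ms")
    print(pick_region(samples))  # europe-west4: lower p95 despite a higher median
```

Ranking by p95 rather than the median reflects the point above: a single slow response in a live broadcast or conversation is what listeners notice.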

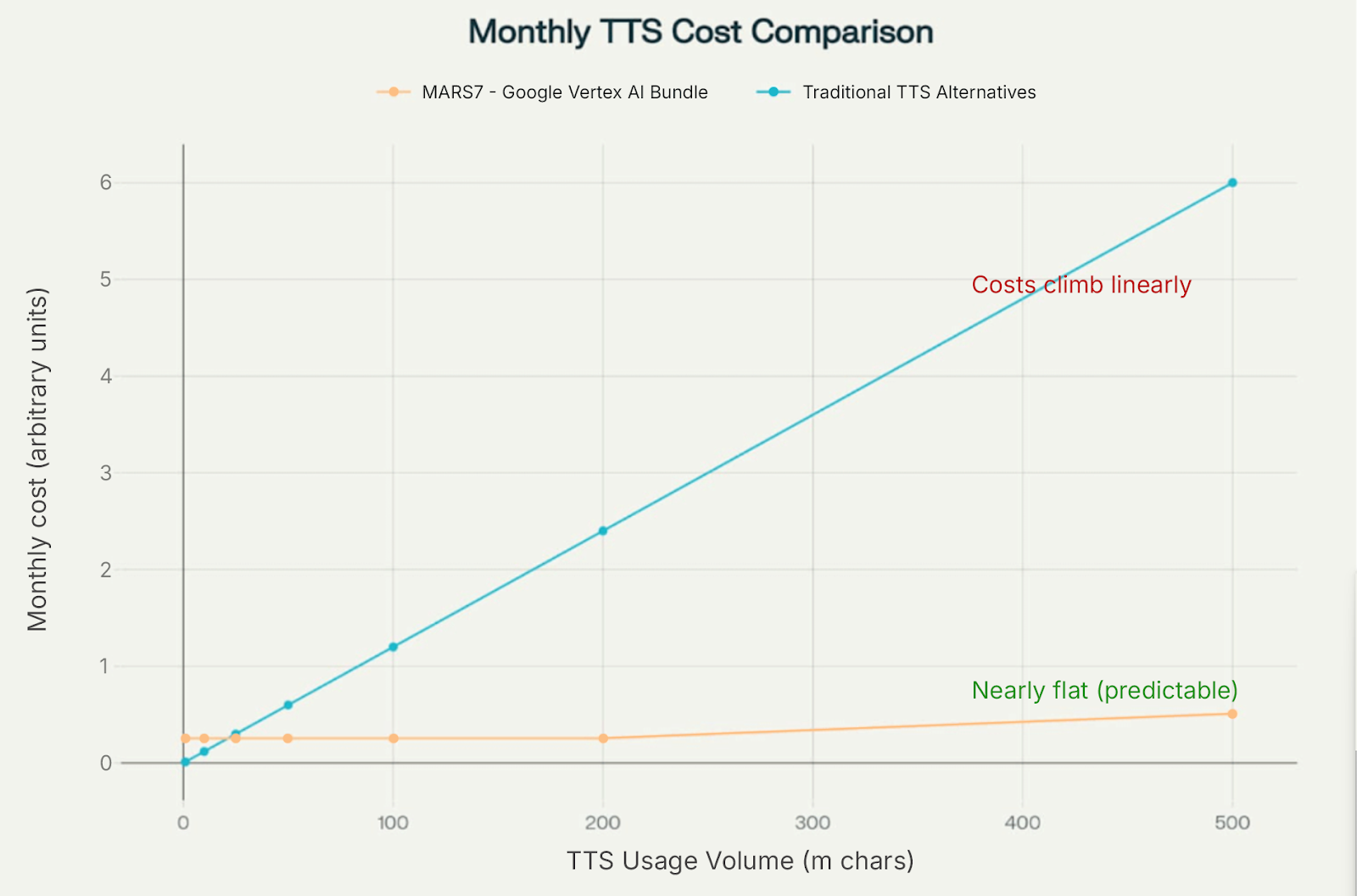

A Pricing Model That Finally Makes Sense for Enterprises

Traditional TTS pricing is built on tokens, characters, or credits. It looks straightforward at first, but at enterprise scale it quickly collapses:

- Unpredictable bills. Costs rise with every character spoken, so spend tracks traffic you can’t fully forecast.

- Punished for success. More traffic means higher spend, even though your infrastructure costs stay the same.

- Opaque rules. Multipliers for premium voices, streaming vs. batch, or “neural” models make forecasting nearly impossible.

The result? CFOs can’t budget, and product teams are forced to throttle usage to control costs.

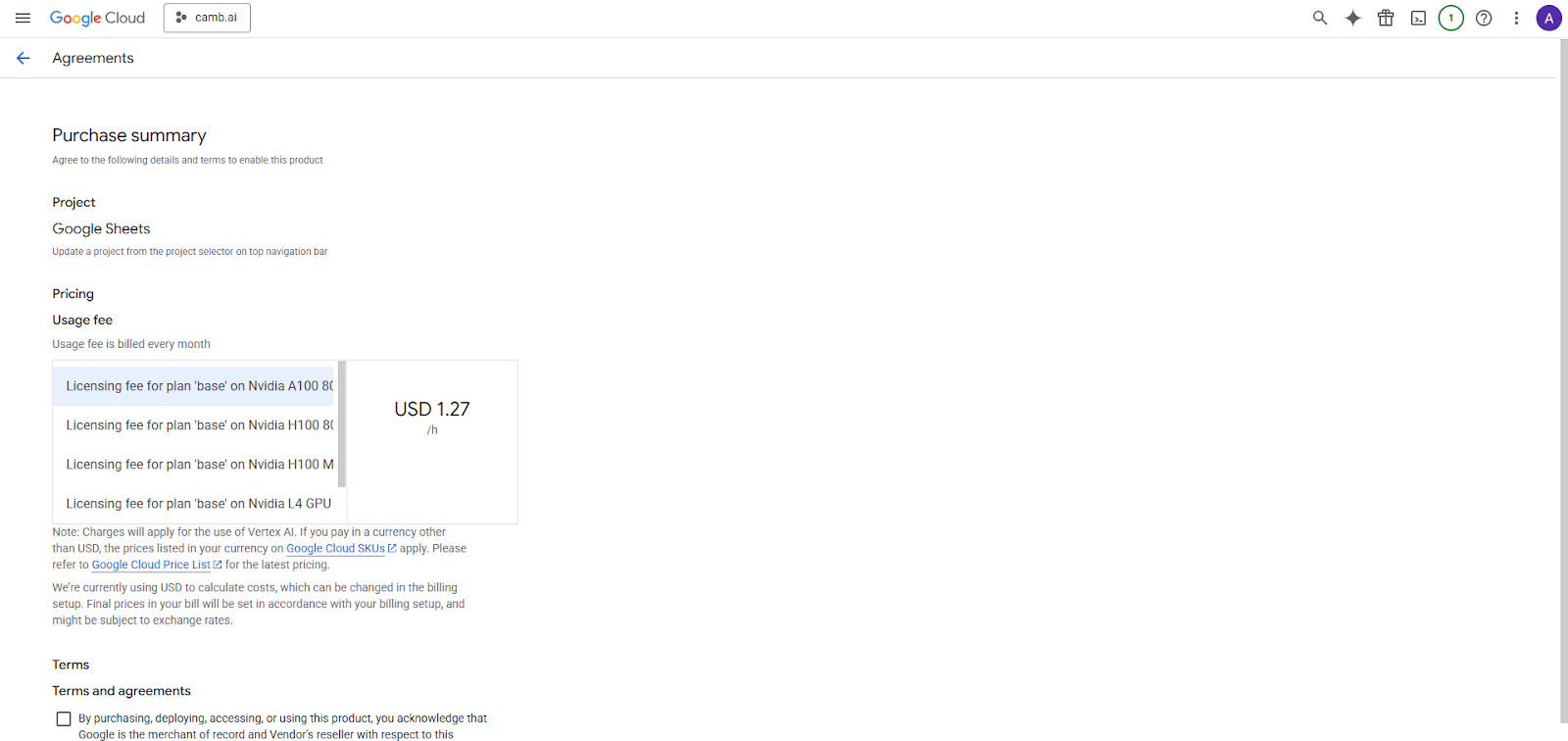

With MARS7 on Vertex AI, the economics flip:

- GPU-first economics. You provision GPUs on Vertex AI (A100, H100, L4, L40S, etc.) the same way you provision GPU compute for any workload.

- Simple licensing. CAMB.AI charges a fixed percentage of your hourly GPU consumption. Since GPU capacity is pre-allocated, your spend stays nearly flat regardless of how many characters you generate on it.

- Unlimited inference. Run millions of real-time API calls or synthesize entire libraries on the capacity you’ve provisioned; your cost doesn't balloon with usage.

- Predictable budgets. CFOs can forecast costs as cleanly as compute or storage.

- Scale without penalty. Whether traffic spikes or libraries grow, your costs don't spiral.

- Freedom to innovate. Teams can experiment and expand without fearing runaway bills.
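To see why the GPU-based model flattens spend, it helps to compare the two bills side by side. The sketch below uses placeholder prices, not actual Google Cloud or CAMB.AI rates, and assumes the license fee is a fixed fraction of GPU spend added on top of the GPU cost itself; check your Model Garden listing and quote for the real figures. The function names are illustrative.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def gpu_monthly_cost(gpu_hourly_usd: float, gpu_count: int, hours: float,
                     license_fraction: float) -> float:
    """GPU compute cost plus a license fee charged as a fixed fraction of it.

    The number of characters synthesized does not appear anywhere:
    the bill depends only on how many GPUs run and for how long.
    """
    compute = gpu_hourly_usd * gpu_count * hours
    return compute * (1.0 + license_fraction)


def per_character_monthly_cost(characters: int, usd_per_million_chars: float) -> float:
    """Usage-based pricing: the bill grows linearly with characters synthesized."""
    return characters / 1_000_000 * usd_per_million_chars


def break_even_characters(monthly_flat_cost: float, usd_per_million_chars: float) -> float:
    """Monthly character volume at which both pricing models cost the same."""
    return monthly_flat_cost / usd_per_million_chars * 1_000_000


if __name__ == "__main__":
    # PLACEHOLDER prices: not real Google Cloud or CAMB.AI rates.
    flat = gpu_monthly_cost(gpu_hourly_usd=2.0, gpu_count=1,
                            hours=HOURS_PER_MONTH, license_fraction=0.25)
    for chars in (10_000_000, 100_000_000, 1_000_000_000):
        usage = per_character_monthly_cost(chars, usd_per_million_chars=15.0)
        print(f"{chars:>13,} chars: flat ${flat:,.0f} vs per-character ${usage:,.0f}")
    print(f"break-even: {break_even_characters(flat, 15.0):,.0f} chars/month")
```

With these placeholder numbers, one GPU running all month costs $1,825 regardless of volume, while the per-character plan passes that amount at roughly 122 million characters per month. The flat line holds only while the provisioned GPUs can absorb your peak load; beyond that you add GPUs, and the bill steps up per GPU-hour rather than per character.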

Need help choosing the optimal configuration for your workload? Schedule a meeting with our experts and we’ll help you get started.

Your Environment, Your Control: The VPC Security, Privacy and Compliance Advantage

For enterprises, performance alone isn’t enough. Security, compliance, and data sovereignty are non-negotiable. Yet most SaaS TTS providers operate from multi-tenant environments:

- Your text leaves your perimeter. Sensitive inputs are processed on external servers.

- Shared infrastructure. Multiple customers share GPU pools, raising compliance questions.

- Regulatory friction. Industries like finance, healthcare, and government face strict requirements (HIPAA, GDPR, FedRAMP) that can make external processing difficult or unacceptable.

With VPC deployment, MARS7 runs entirely within your own cloud environment:

- Complete data sovereignty. Text never leaves your VPC. All processing, logging, and monitoring stay under your governance.

- Single-tenant security. Dedicated GPU resources ensure isolation from other tenants.

- Compliance alignment. Run under your existing frameworks without routing data through a third-party TTS service.

For organizations where data trust is paramount, this control isn’t optional — it’s mandatory. With MARS7 on Vertex AI, you get enterprise-grade voice AI without compromise.

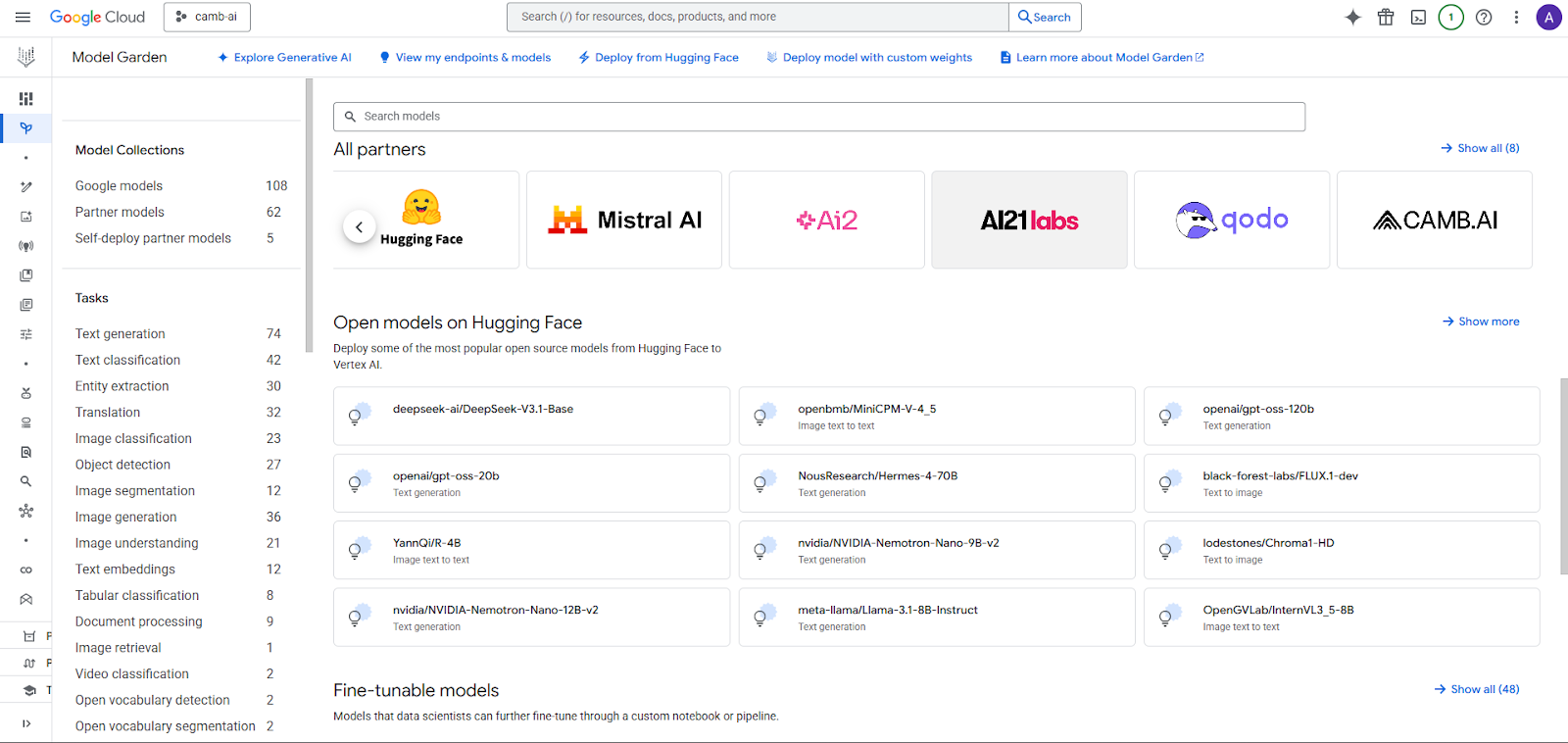

Simple Deployment, Powerful Results

Getting MARS7 running in your environment is straightforward:

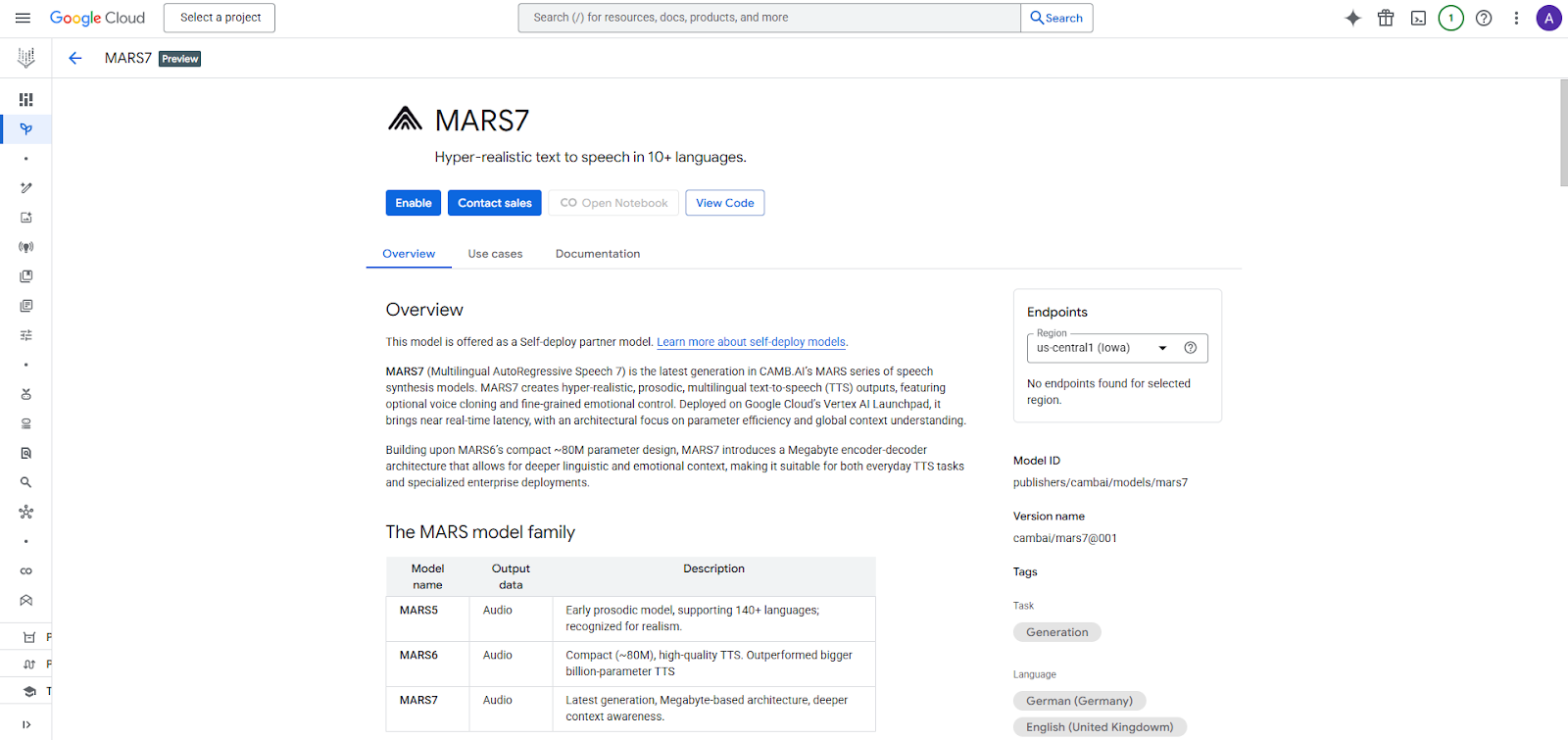

- In the Google Cloud Console, open Vertex AI Model Garden.

- Select Self‑deploy partner models to view VPC deployment options.

- Choose MARS7 from the available models.

- Click Enable to purchase your license.

- Click Deploy to launch MARS7 in your VPC.

That’s it. Within minutes, MARS7 runs entirely within your infrastructure, accessible through standard APIs your team already knows.
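Once deployed, the model is served from a Vertex AI endpoint in your project. The sketch below builds and sends a request to Vertex AI’s documented `endpoints.predict` REST method using only the Python standard library. Two assumptions to flag: the instance fields `text` and `language` are hypothetical placeholders, since the MARS7 request and response schema isn’t described here (use the sample request on the model card or endpoint page), and the URL assumes a standard regional endpoint; dedicated or private endpoints use a different host, which the console shows for your deployment.

```python
import json
import urllib.request
from typing import Any, Dict, Tuple


def build_predict_request(project: str, location: str, endpoint_id: str,
                          text: str, language: str) -> Tuple[str, bytes]:
    """Build the URL and JSON body for Vertex AI's endpoints.predict REST method.

    The instance fields "text" and "language" are HYPOTHETICAL placeholders;
    use the request schema from the MARS7 model card in Model Garden.
    """
    url = (f"https://{location}-aiplatform.googleapis.com/v1/"
           f"projects/{project}/locations/{location}/endpoints/{endpoint_id}:predict")
    body = {"instances": [{"text": text, "language": language}]}
    return url, json.dumps(body).encode("utf-8")


def predict(url: str, body: bytes, access_token: str) -> Dict[str, Any]:
    """POST the request with an OAuth access token and return the parsed JSON response."""
    req = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {access_token}",
                 "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `url, body = build_predict_request("my-project", "us-central1", "1234567890", "¡Bienvenidos a la carrera!", "es-MX")` followed by `predict(url, body, token)`, with a token from `gcloud auth print-access-token`. The project ID, endpoint ID, and language code here are made up, and the shape of the returned JSON depends on the MARS7 serving container.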

🎥 Watch how to deploy MARS7 in your VPC in a few simple steps.

Proven Impact: Real‑World Success Stories on Vertex AI

MARS7 on Vertex AI is already transforming how businesses across industries create and scale content.

- Instreamatic, a leader in AI-powered audio advertising, deployed MARS7 on Vertex AI and achieved:

  - A projected 60% reduction in voice production costs

  - +22 percentage points in brand favorability

  - AI-generated ads outperforming human-recorded ones

🎥 Watch the Instreamatic overview video

- Koyal AI built an end-to-end script-to-audio-to-video pipeline with MARS7, producing multilingual content in days that would have taken traditional studios weeks. They’re not just cutting costs — they’re unlocking new creative workflows that weren’t possible before.

These aren’t experiments. They’re proof that multilingual AI voice is ready for enterprises and startups at scale.

Getting Started with MARS7 in Your VPC

Whether you’re building the next generation of customer experiences or transforming how your organization creates content, MARS7 delivers uncompromising quality with complete control, speed and privacy.

Ready to deploy MARS7 in your VPC? Visit the Google Cloud Vertex AI Model Garden to get started and experience multilingual voice AI that runs entirely in your environment.
